Though some would argue that the data-centric perspective stands on its own as a process modeling technique, I believe this approach provides more value as one distinct view within a holistic BPM methodology. Each view or perspective builds upon the others toward the most complete reflection of business function and enterprise.

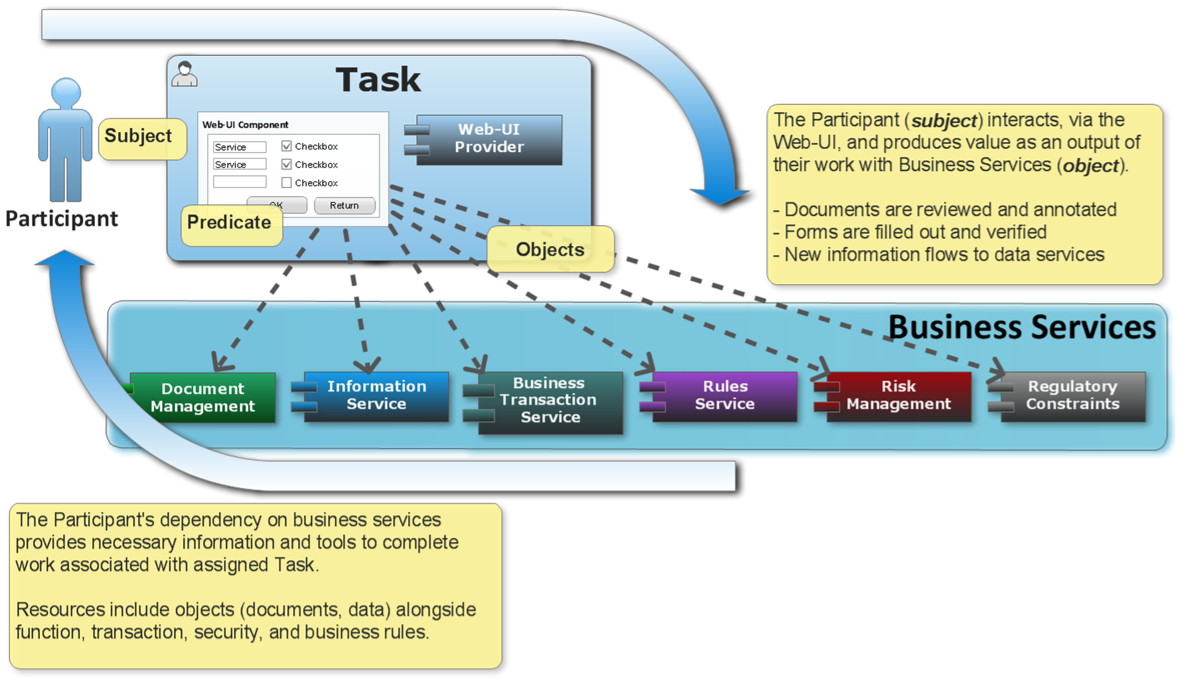

The data-centric perspective is then a process modeling technique in which requirements shift the conversation toward objects and data. Design takes on the advanced topics of user interface, documents, event management, and integration.

Where Does the Data-centric View Fit Within BPM?

From a methodology perspective, the order of focus is:

- Process-centric: Process flows are initially defined with sufficient details supporting both application and data-centric views.

- Application view: This is basically the integration view without yet including schema or domain details (which are constructed in the following phase). Applications and services are represented as participants in process flows. This can also be considered the Subject-oriented perspective given its focus on actors (subjects) and the necessary coordination between them (integration).

- Data-centric: This perspective builds from both process and application views. A data-oriented approach focuses on enabling object visibility between system agents. Data changes dynamically affect progress and composition. “Passive participants” (objects) take a more leading role in the flow and execution of the business process [1] so that both data and context are shared between activities and sub-components (e.g. “networked”).

Don’t forget that our methodology iterates towards completion – NO SINGLE “view” or “approach” necessarily completes prior to the next. This means, for example, that we could leave an initial process model (view) incomplete while advancing onto application and data-centric views.

The Value Behind Data-centric BPM

- Data Access – Avoid data-context tunneling. Activity and data models (business domain) must bridge between tasks and sub-systems so that they are available to all necessary participants [2].

- Data-state Reaction – Processes, activities, and sub-systems have visibility into, and can react to, both data state changes and constraint events [1].

- Object-instance Coordination – Process and activity instances support synchronization and both vertical and horizontal aggregation. Additionally, asynchronous coordination ensures minimal coupling for proper transaction management and rule execution [3].

- Data-oriented Granularity – Atomicity and composition of process and activity instances are based on process data, so that fine-grained, cross-cutting aggregates (or composites) can be safely assembled and executed in batch while respecting the requisite rules and constraints [3].

- Data Integrity – Application and business domain data integrity is safeguarded and ensured from process execution through the application, service, and persistence layers (sub-systems) [3].
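The Data-state Reaction point above can be illustrated with a small observer-style sketch. This is only an assumption of how such visibility might be wired up, not a reference design: the `BusinessObject` class, the listener signature, and the state names are all hypothetical and chosen for illustration.

```python
from dataclasses import dataclass, field
from typing import Callable, List

# Hypothetical callback signature: (object_id, old_state, new_state).
StateListener = Callable[[str, str, str], None]


@dataclass
class BusinessObject:
    """A shared domain object that activities and sub-systems can observe."""

    object_id: str
    state: str = "draft"
    listeners: List[StateListener] = field(default_factory=list, repr=False)

    def subscribe(self, listener: StateListener) -> None:
        self.listeners.append(listener)

    def set_state(self, new_state: str) -> None:
        old_state = self.state
        if new_state == old_state:
            return  # no change, nothing to announce
        self.state = new_state
        # Iterate over a copy so a listener may add further listeners safely.
        for listener in list(self.listeners):
            listener(self.object_id, old_state, new_state)


def demo_reaction() -> List[str]:
    log: List[str] = []
    order = BusinessObject("order-42")
    # Two independent participants watch the same object instance.
    order.subscribe(lambda oid, old, new: log.append(f"billing saw {oid}: {old}->{new}"))
    order.subscribe(lambda oid, old, new: log.append(f"shipping saw {oid}: {old}->{new}"))
    order.set_state("approved")
    order.set_state("approved")  # same value again: listeners are not called
    return log
```

Here the object, rather than the process flow, drives the reaction: both participants learn about the change without the data being tunneled through intermediate activities.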

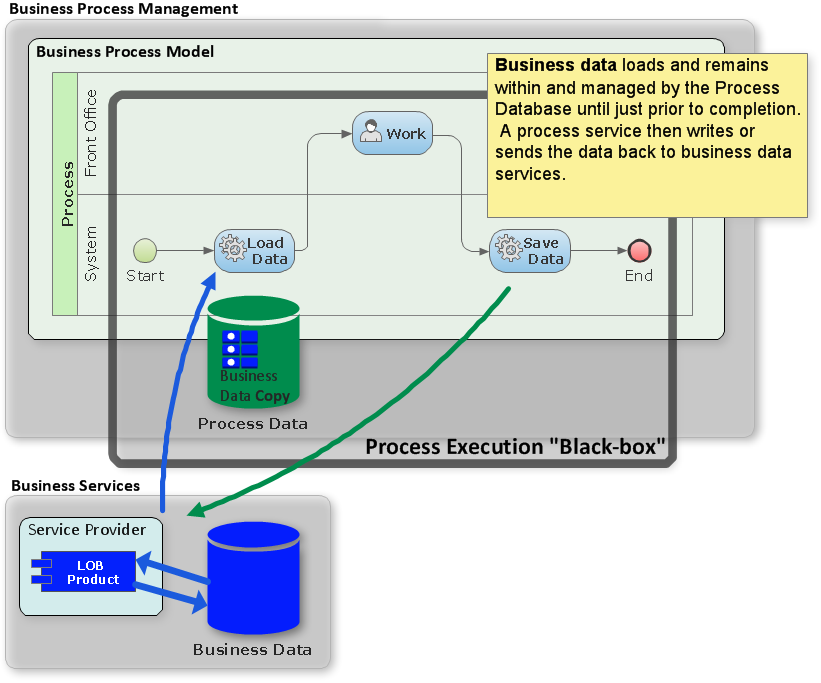

The Process-centric “Black-box” of Data Management

This sort of data management is referred to as the “Black-box” [4] due to its limited interaction with business data services during process execution. While this is a preferred and perfectly acceptable approach to managing data during our initial Process-centric phase, it may not be the ideal method for handling volatile information. The reason is that business data may fall out of sync if other, external applications attempt modifications on the source-of-record (business database) while its copy resides within the process execution context.

A process, for the most part, does not apply locks on business (domain) data due to its affinity for long-running transactions. This means there is a good chance of integrity violations if another application updates our source data prior to process completion.
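The stale-copy risk described above can be made concrete with a short sketch. It uses a version check on write (optimistic concurrency control), which is a technique brought in here purely for illustration and is not prescribed by the cited papers. The `SourceOfRecord` class, the record key, and the field names are hypothetical stand-ins for a real business database.

```python
import copy
from typing import Any, Dict, Tuple


class StaleDataError(Exception):
    """Raised when a write is based on an outdated copy."""


class SourceOfRecord:
    """In-memory stand-in for the business database (hypothetical)."""

    def __init__(self) -> None:
        self._rows: Dict[str, Tuple[int, Dict[str, Any]]] = {}

    def insert(self, key: str, data: Dict[str, Any]) -> None:
        self._rows[key] = (1, copy.deepcopy(data))

    def read(self, key: str) -> Tuple[int, Dict[str, Any]]:
        version, data = self._rows[key]
        return version, copy.deepcopy(data)  # caller gets a detached copy

    def write(self, key: str, data: Dict[str, Any], expected_version: int) -> int:
        current_version, _ = self._rows[key]
        if current_version != expected_version:
            raise StaleDataError(
                f"{key}: expected v{expected_version}, found v{current_version}"
            )
        self._rows[key] = (current_version + 1, copy.deepcopy(data))
        return current_version + 1


def demo_stale_copy() -> str:
    db = SourceOfRecord()
    db.insert("customer-7", {"credit_limit": 1000})

    # The process copies the record into its execution context and waits.
    version_in_process, copy_in_process = db.read("customer-7")

    # Meanwhile an external application updates the source-of-record.
    ext_version, ext_data = db.read("customer-7")
    ext_data["credit_limit"] = 200
    db.write("customer-7", ext_data, ext_version)

    # The process finally writes back its outdated copy.
    copy_in_process["approved_amount"] = 900
    try:
        db.write("customer-7", copy_in_process, version_in_process)
    except StaleDataError:
        return "conflict detected"
    return "silent overwrite"
```

Without the version comparison in `write`, the process would silently overwrite the external update, which is exactly the integrity violation that a lock-free, long-running process invites.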

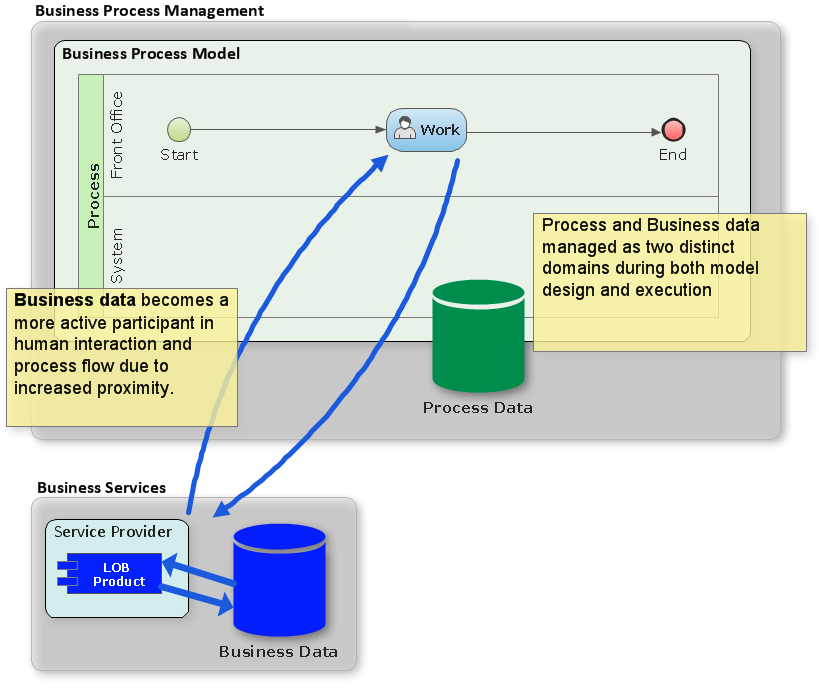

The Object-centric View on Data Management

Business data is brought into close proximity with human interaction and process execution. Reduced latency and increased cross-system visibility (concurrency) help reduce the risk of resource contention during long-running transactions.

With an architecture now leaning towards object-centered requirements, we’re looking at the following features:

- Alert user to context-related events (state change)

- Register and listen for infrastructure (data-source, transaction) callback/event notification

- Asynchronous communication between user interface and dependent sub-systems (e.g. web-sockets)

- Manage form/field representation (read-only, editable, etc.)

- Support communication between process and service

- Support both object query and sets for presentation and management

While not exactly solving our transaction-related issues, we are bringing in additional technology and creative workarounds to help break up a potentially unmanageable long transaction into its smaller sub-parts.
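Two of the features listed above, asynchronous communication with the user interface and managing form/field representation, can be sketched together. This is a minimal illustration under stated assumptions: an `asyncio.Queue` stands in for a push channel such as a web-socket, and the state names and the `FIELD_MODE_BY_STATE` mapping are hypothetical.

```python
import asyncio
from typing import Dict, List, Optional

# Hypothetical mapping from object state to how a form field is rendered.
FIELD_MODE_BY_STATE: Dict[str, str] = {
    "draft": "editable",
    "submitted": "read-only",
    "approved": "read-only",
}


async def backend(events: "asyncio.Queue[Optional[str]]") -> None:
    """Stand-in for a sub-system that pushes state changes to the UI."""
    for state in ("submitted", "approved"):
        await events.put(state)
    await events.put(None)  # sentinel: no more events


async def form_session(events: "asyncio.Queue[Optional[str]]") -> List[str]:
    """Stand-in for the user interface: re-renders the field on every event."""
    rendered = [FIELD_MODE_BY_STATE["draft"]]
    while True:
        state = await events.get()
        if state is None:
            break
        # Unknown states fall back to read-only as the safer default.
        rendered.append(FIELD_MODE_BY_STATE.get(state, "read-only"))
    return rendered


async def main() -> List[str]:
    events: "asyncio.Queue[Optional[str]]" = asyncio.Queue()
    _, rendered = await asyncio.gather(backend(events), form_session(events))
    return rendered


def run_demo() -> List[str]:
    return asyncio.run(main())
```

The form never polls the source-of-record. It is told when the object changes and adjusts what the user may edit, which keeps each interaction short even though the overall business transaction is long.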

References

[1] Rui Henriques, Antonio Silva. Object-Centered Process Modeling: Principles to Model Data-Intensive Systems. Business Process Management Workshops (2010)

[2] Wil van der Aalst, Mathias Weske, Dolf Grunbauer. Case handling: A new paradigm for business process support. Data and Knowledge Eng. 53 (2005)

[3] Vera Künzle, Manfred Reichert. Towards object-aware process management systems: Issues, challenges, benefits. In: Enterprise, BP and IS Modeling. LNBIP, vol. 29, pp. 197–210. Springer, Heidelberg (2008)

[4] Vera Künzle, Barbara Weber, Manfred Reichert. Object-aware Business Processes: Properties, Requirements, Existing Approaches (2010)